I know it sounds banal, but I’d like to revisit the question: “What is conversion rate optimization?”

If you search Google, the answer seems obvious enough. The first result, from Moz, defines it as, “the systematic process of increasing the percentage of website visitors who take a desired action — be that filling out a form, becoming customers, or otherwise.”

That seems right to me.

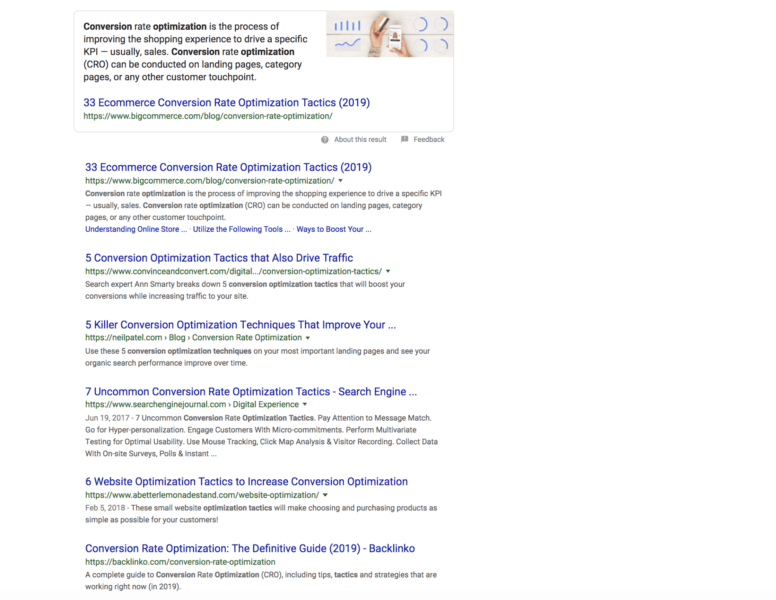

Then why do so many articles, courses, lectures and talks focus on conversion rate optimization tactics?

These articles – some of which include hundreds of tips and tactics – include advice like “use high-quality images” and “offer free shipping.”

These are assuredly good pieces of advice.

One would assume high-quality images would perform better than low-quality images (subjectivity aside), and I’m sure customers delight in not having to pay for shipping (though operationally, this adds some complexity).

Any single tactic or tip on this list is not in itself conversion optimization (or growth or growth hacking or experimentation or whatever word you’re using for the practice of evidence-based decision making).

For the sake of this article, I’m going to say conversion optimization and growth, by and large, are pretty much the same thing.

Growth usually encompasses product and marketing, whereas conversion optimization usually just looks at the website experience, though that seems to be a pretty minor distinction in the grand scheme of things. Both encourage experimentation, data-driven decision making, and fast learning and iteration.

And people have a ton of “growth hacking tips” and tactics.

However, without context none of this is helpful. Would the aspiring growth hacker or conversion optimizer just run down each list and implement each thing? Test each thing individually?

CRO tactics vs. strategy (and operating systems)

The first lesson from these searches is that people misunderstand tactics and strategies and use the words interchangeably.

Strategy is the “overarching plan or set of goals.” Tactics “are the specific actions or steps you undertake to accomplish your strategy.”

For instance, if it were my strategy to appear as a growth thought leader (whatever that means), one tactic in my toolbelt may be writing articles like this. Another may be doing webinars hosted on my personal website. Another might be doing local meetups.

For my role at HubSpot, I could carve out a strategy to appear at every possible organic location for bottom funnel search results. Tactically, this could mean writing listicles like our “best help desk software” article. It could also mean getting more customer reviews to lift our prominence on review sites that already appear near the top of Google for these terms.

Now, I think that CRO or growth should be looked at as neither a strategy nor a tactic. It should be viewed as an operating system.

An operating system, removed slightly from its technical origins, defines the rules, functions, heuristics and mannerisms that control a system. In short, it’s a code (both implicit and explicit) that defines how decisions are made.

What this means practically is that a conversion optimizer or growth hacker should look much less like a vigilante ninja, complete with both a broad and simultaneously specialized skill set, who can come in and optimize a landing page or fix a referral loop.

Instead, the practice should look much more like building and maintaining infrastructure.

This is an idea inspired in part by Ed Fry (from this blog post and from several conversations). In his article, he distinguishes marketing (those who write the copy, launch the campaigns, define the brand) from growth (the scientific method, which has come a long way in marketing due to technological enablement like front-end testing tools).

He writes:

“Our observation is growth enables marketing, product, sales and other teams across the organization. It sits at an operational role, supporting multiple teams across the company, and rolls up to Operations or the CEO. This is not about managing marketing activities that have to happen every day. Growth is far more concerned about moving levers behind the sales & marketing activity instead of the functional practices of campaigns, brand, and so on.”

This is where I think CRO (or growth) thrives, particularly as a company expands in size and sophistication.

No matter how you cut it, the process usually looks something like this:

We collect data and information, put it through our proprietary growth or CRO process (made up of a unique blend of technology, processes and humans), and our output is better decisions and experiences for our users.

But why should that live within the purview of one person or even one team? What if we could enable everyone in the company to make better decisions, systematically?

Where does CRO fit into the company structure?

CRO teams tend to be either centralized or decentralized (or in growth parlance, independent or function-led).

In a centralized model, everything flows through that team, which makes for a more structured and predictable system but can create a bottleneck when other teams want to join in. Here’s an article about the relative pros and cons of each model.

There’s a third model as well that I see more often now, particularly in large organizations with sophisticated experimentation programs: the center of excellence model.

Ronny Kohavi talked about this in an HBR article and explains it like this:

“A center of excellence focuses mostly on the design, execution, and analysis of controlled experiments. It significantly lowers the time and resources those tasks require by building a companywide experimentation platform and related tools. It can also spread best testing practices throughout the organization by hosting classes, labs, and conferences.”

In other words, if we move to a center of excellence model, CRO or growth teams can focus on building up three components of company infrastructure:

- Technical ability (tools)

- Education and best practices

- Attitudes/beliefs (culture)

CRO should support technical enablement and tooling

First and foremost, to build a company where everyone can run experiments and make better decisions, it’s important to give people the tools and technology needed to do that. I think that falls under three areas:

- Data

- Experimentation capabilities

- Knowledge sharing

To make better decisions, we need better data. A growth or CRO team can help implement, orchestrate and access the data each team needs to make better decisions.

Of course, there are a million tools on the market, ranging from the free and ubiquitous (Google Analytics) to the enterprise (Adobe Analytics) to the custom setups loved in technical organizations.

There’s no right choice for every organization, but it’s an important decision to discuss.

The second point is to decide on an experimentation framework or platform. Again, there are tons of tools available, ranging from free (Google Optimize) to enterprise to custom built.

How you set this up should have a lot to do with your organization’s technical capabilities, culture and functional needs. Echoing the above, there’s no easy answer here – but here’s a really interesting paper on how Microsoft has built their experimentation platform.

Finally, knowledge sharing is probably the most underrated. Assuming you have several teams running trustworthy experiments, delivering better experiences and getting results – the next logical piece in the puzzle is to allow archiving and communicating these results.
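To make the archiving idea a bit more concrete, here is a minimal sketch of what one archived experiment could look like. Everything in it is an assumption for illustration: the `ExperimentRecord` class, its field names, and the `to_archive_line` and `search` helpers are hypothetical, not part of any particular tool.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ExperimentRecord:
    """One archived experiment. All field names are illustrative assumptions."""
    name: str
    team: str
    hypothesis: str
    primary_metric: str
    control_rate: float   # conversion rate of the control, e.g. 0.04
    variant_rate: float   # conversion rate of the variant, e.g. 0.05
    decision: str         # e.g. "ship", "iterate", "drop"

    @property
    def relative_lift(self) -> float:
        """Relative change of variant over control (assumes control_rate > 0)."""
        return (self.variant_rate - self.control_rate) / self.control_rate


def to_archive_line(record: ExperimentRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    payload = asdict(record)
    payload["relative_lift"] = round(record.relative_lift, 4)
    return json.dumps(payload, sort_keys=True)


def search(records, keyword):
    """Return records whose name or hypothesis mentions the keyword."""
    keyword = keyword.lower()
    return [
        r for r in records
        if keyword in r.name.lower() or keyword in r.hypothesis.lower()
    ]


if __name__ == "__main__":
    record = ExperimentRecord(
        name="Pricing page CTA copy",
        team="growth",
        hypothesis="A benefit-led CTA increases trial signups",
        primary_metric="trial_signup_rate",
        control_rate=0.04,
        variant_rate=0.05,
        decision="ship",
    )
    print(to_archive_line(record))
    print([r.name for r in search([record], "cta")])
```

The point is less the format than the habit: if every team writes down the hypothesis, the metric and the decision in the same shape, other teams can actually find and reuse the result later.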

Education, training and best practices

The second component of infrastructure is education. If you’re going to democratize experiments, then you’ll want to make sure everyone knows how to run them.

Personally, I love the Airbnb model – they send employees through Data University to train everyone in the fundamentals.

I realize this is a heavy up-front and top-down effort, so it doesn’t need to be as robust right off the bat. Your team could simply act as an internal consultancy, holding office hours and supporting interested teams when they run experiments. Normally it takes a short ramp-up period before the team or the analyst/marketer is off and running by themselves.

At the very least, documenting how to set up and analyze experiments is something that should be done. Having a resource center, or at the very least a checklist or list of guidelines like I’ve tried to put together in this article, helps people feel more comfortable running their own tests properly.
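As one example of what such documentation might include, here is a rough sketch of a common way to analyze a simple A/B test on conversion rate: a two-sided, pooled two-proportion z-test. This is my illustration, not a prescribed method; the function name `two_proportion_z_test` and the sample numbers are assumptions, and a real program would also document sample size planning and stopping rules.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates with a pooled two-proportion z-test.

    conv_a / n_a: conversions and visitors in the control.
    conv_b / n_b: conversions and visitors in the variant.
    Returns (z, two_sided_p_value).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Each group needs at least one visitor")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("No variation in outcomes; the test is undefined")

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: 200 of 5,000 control visitors converted,
    # versus 250 of 5,000 variant visitors.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A checklist built around something like this would tell people which numbers to collect before they look at a result, which is most of what keeps a first-time tester out of trouble.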

Empowering a culture of experimentation

Finally, the last component of infrastructure is the subtle and the emotional. CRO and growth teams should be cheerleaders for evidence-based decision making, experimentation and the judicious use of data in campaigns.

I’ve written a lot about building a culture of experimentation in the past and can’t say there’s any one tip or tactic or magic bullet to do it.

Often, the best way is to have a powerful and influential evangelist at the top leading the way.

Sometimes it’s built from the bottom up by consistently showing results and disseminating them through the company via wiki posts, newsletters, and weekly experiment readouts.

This may be the most important job of the CRO or growth team, as it builds a sort of “flywheel” effect. The more excited others are about growth and experimentation, the more they’re willing to learn and improve their own skill sets, and the more evangelists you’ll have for the program – a perpetual motion device of data-driven decision making that should help you edge out the competition in the long run.

Opinions expressed in this article are those of the guest author and not necessarily Marketing Land. Staff authors are listed here.