Change is a natural part of a business, particularly when it comes to your digital presence.

The need to rebrand, switch CMS (content management system), consolidate your resources, or revamp the architecture and user journey of your website is ultimately inevitable. And whatever the goal may be, it is not uncommon for all of these major initiatives to fall under the remit of the contemporary digital marketer.

How does Google feel about changes?

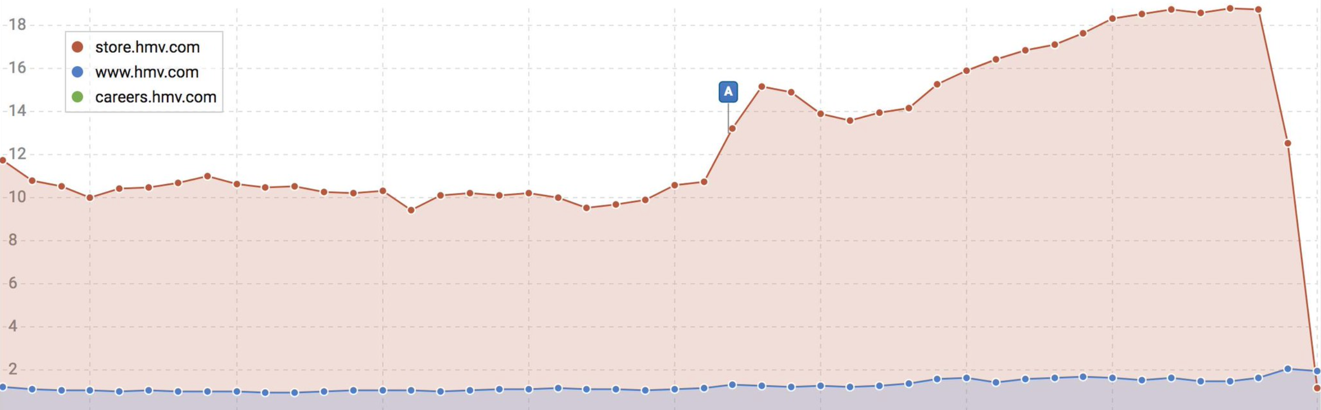

One thing to keep in mind, however, is Google’s tendency to be less than accommodating towards major website changes, especially URL changes. And who can blame them? Whilst Google’s algorithm may be able to detect semantic differences between websites, it’s somewhat unrealistic to expect it to also realize that the similarities between store.hmv.com and hmv.com mean they belong to the same brand.

If you don’t account for this, a domain change can result in staggering losses of traffic and rankings, and suddenly the most well-known brand in an industry becomes non-existent within Google’s universe. It is therefore imperative to ensure that Google can correctly interpret the changes you’re making.

How to understand Google

Expecting a lone digital marketer to be a jack of all channels is quite unrealistic. But luckily, you don’t need to be. There’s a whole industry of people who dedicate their days to figuring out how to think exactly like Google, and they can help you avoid decimating your hard-earned keyword rankings (unless you’re using black hat tactics, in which case those rankings aren’t very hard-earned after all). This industry is SEO.

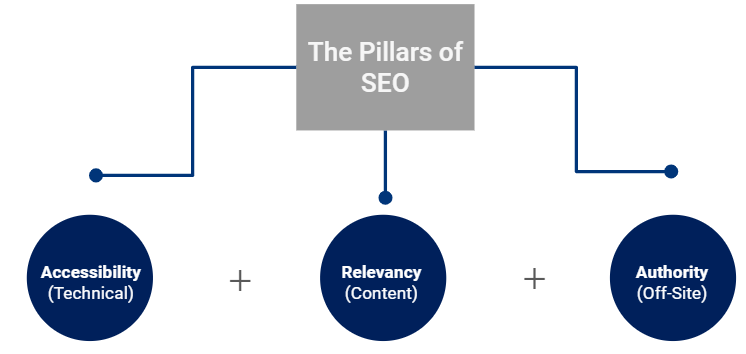

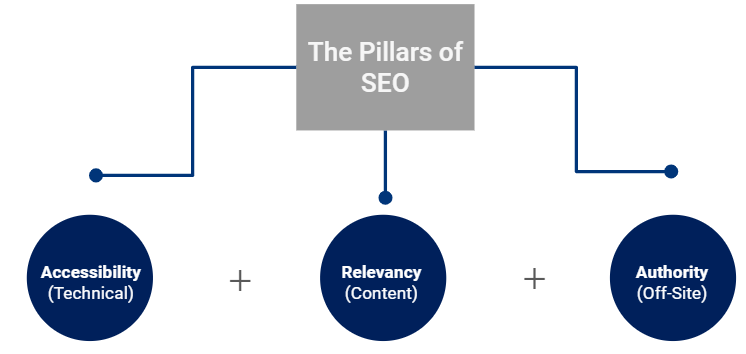

Three pillars of SEO

Before we dive into the value of SEO, here’s a quick summary of its three key pillars:

- Accessibility: Technical workings of the site. This includes everything that Googlebot takes into account when understanding your site’s code. Basically, all the tags and developer language that are telling the crawlers how the site should be interpreted.

- Relevance: Content your visitors and Googlebot came for, including all of the text and metadata on your pages, blog posts, and even videos – everything your visitors see.

- Authority: Backlinks from other sites, with each one counting as a “vote” of confidence, which Google takes into account when ranking.

So with that crash course, we can now connect the dots between SEO expertise and high-level migration requirements.

Why you need SEO

Whilst a website’s appearance is important, first and foremost it’s crucial to understand how you’re going to explain the changes you’re making to Google. We suggest a handwritten note:

“Dear Google,

Don’t worry, some things are changing but we still love you, so here is a comprehensive, incredibly large map of URL redirects detailing the new versions of the exact same pages you know and ranked the first time around.”

On a more serious note, however, here are five ways in which the expertise of an SEO professional can propel your website towards successful migration.

1. Taking the complexity out of URL mapping and redirects

Since a site’s internal linking and page equity are an essential part of SEO, we deal with redirect handling, URL mapping, and all the complications that come along with them, all the time. You have to make sure that each redirect makes sense, and also that each page is able to take on its new status. Common issues at this stage can include:

- Incorrectly implemented redirects (a temporary 302 or the dreaded 307 instead of a permanent 301) that may undermine your intentions

- Extremely long redirect chains or even infinite redirect loops, which will cause Google to rage-quit the page or even your entire site

- Redirects to irrelevant pages, which Google may not mind too much but will annoy your users
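The first two issues in the list above are mechanical enough to check before launch. Here is a minimal sketch, assuming the redirect map is available as a plain dictionary of old path to (status, target). The `audit_redirects` name, the map shape, and the `max_hops` threshold of 3 are all illustrative assumptions, not part of any particular tool.

```python
from typing import Dict, List, Tuple

# Hypothetical redirect map shape: old path -> (HTTP status, target path).
RedirectMap = Dict[str, Tuple[int, str]]


def audit_redirects(redirects: RedirectMap, max_hops: int = 3) -> Dict[str, List[str]]:
    """Flag non-301 redirects, redirect loops, and long chains.

    max_hops is an assumed threshold for illustration, not a documented limit.
    """
    issues: Dict[str, List[str]] = {}
    for source, (status, _target) in redirects.items():
        problems: List[str] = []
        if status != 301:
            problems.append(f"status {status} is not a permanent 301")

        # Follow the chain from this source until it leaves the map or repeats.
        seen = {source}
        current = source
        hops = 0
        while current in redirects:
            current = redirects[current][1]
            hops += 1
            if current in seen:
                problems.append("redirect loop")
                break
            seen.add(current)
        else:
            # Only reached when the chain ended without looping.
            if hops > max_hops:
                problems.append(f"chain of {hops} hops exceeds {max_hops}")

        if problems:
            issues[source] = problems
    return issues


example_map: RedirectMap = {
    "/old-home": (301, "/home"),
    "/old-shop": (302, "/shop"),       # temporary redirect, gets flagged
    "/loop-a": (301, "/loop-b"),
    "/loop-b": (301, "/loop-a"),       # loops back, gets flagged
}
for path, problems in audit_redirects(example_map).items():
    print(path, problems)
```

A check like this cannot judge the third issue, relevance, which still needs a human to confirm that each target page is a sensible replacement.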

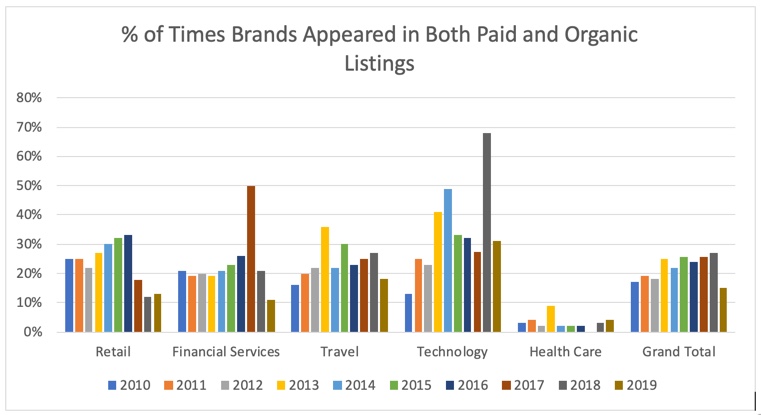

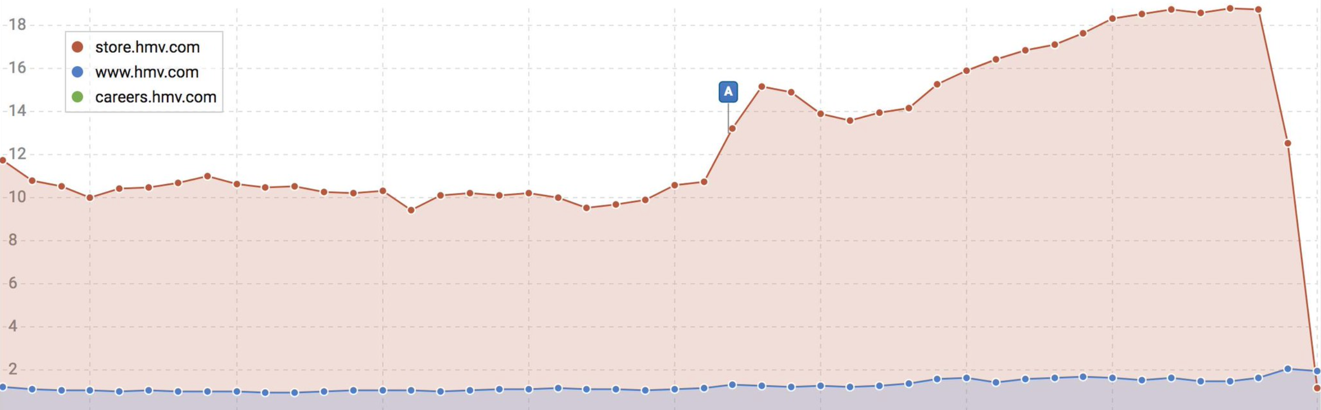

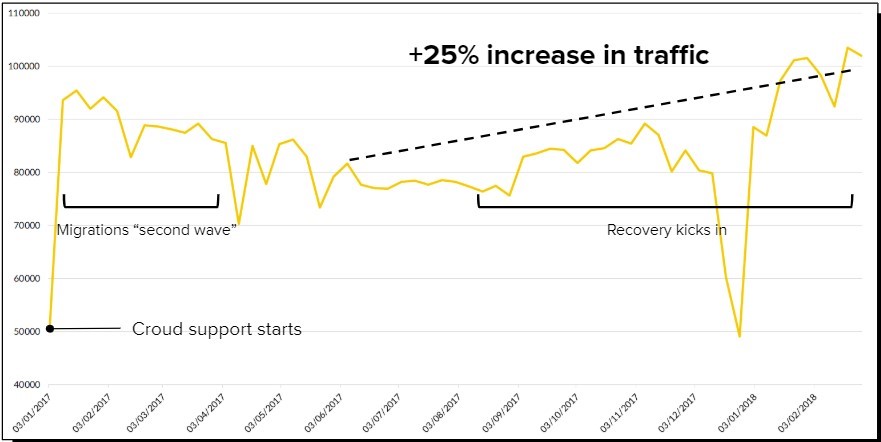

Just in case you’re not convinced, here’s a scary graph of what happens when you don’t do this properly.

Source: Croud

The process of telling Google what’s what extends beyond redirect mapping; it also includes on-page work, specifically the canonical tag.

Fun fact: 301 redirects don’t actually stop Google from indexing your old pages, so if you left it at that, you would just end up with some poor rankings and some confused users. Luckily, your friendly neighborhood SEO knows all about the various ways to encourage Google to drop your old pages out of the index as it crawls your new site.
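One on-page check that lends itself to automation is confirming that each new page declares the canonical URL you expect. The sketch below uses only Python’s standard `html.parser`; the `find_canonical` helper and the example.com URL are hypothetical names chosen for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical new-site page used only for illustration.
page = '<html><head><link rel="canonical" href="https://www.example.com/new-page/"></head></html>'
print(find_canonical(page))
```

Comparing the returned value against the URL in your redirect map is one way to spot pages whose canonical tag still points at the legacy site.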

2. Understanding your website’s behavior

So, you’ve done all the mapping and have set up just how to introduce Google to your new site. While that’s very exciting, we do have to remember the “understanding” part of these first several weeks. The primary reason for site migration is to provide a new and improved site that will (hopefully) gain more traffic and drive more business. However, without understanding how your original site performed, it’s very difficult to establish if your new site is actually superior. This, therefore, highlights the importance of benchmarking.

Of course, you may know how much traffic your ad campaigns – and even your website in general – are pulling in, but you’ll need to know more than that to be successful. As SEOs, our aim is to understand your site as well as the search engines do, which, as explained above, involves much more than just the content on your pages.

To paint the best picture of your website before you migrate, use several tools that provide a variety of key SEO data points:

- Keyword rankings and their respective landing pages

- Links to your site

- Pages with 200 (and non-200) status codes

- Crawl volume and frequency

By aggregating the different metrics and views of each tool, you can create a beautiful, detailed portrait of how your website behaves, and how it’s interpreted by both search engines and users. Astute benchmarking will allow for in-depth, helpful post-migration analysis, particularly for those metrics that can only be recorded at a particular moment. After the fact, there’s no way to tell how fast your pages loaded, or how many pages returned non-200 status codes last week. If you don’t gather this information beforehand, you won’t be able to fully report the impact of the migration.
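As a rough illustration of why the snapshot matters, the sketch below compares a pre-migration crawl with a post-migration crawl and lists pages that used to return 200 but no longer have a healthy equivalent. The dictionary shapes and the `compare_status_snapshots` name are assumptions for this example; real crawl exports will look different.

```python
from typing import Dict, List


def compare_status_snapshots(
    before: Dict[str, int],
    after: Dict[str, int],
    url_map: Dict[str, str],
) -> List[str]:
    """List old URLs that returned 200 before migration but regressed after.

    before:  old URL -> status code recorded before the migration
    after:   new URL -> status code recorded after the migration
    url_map: old URL -> new URL (the redirect mapping)
    """
    regressions: List[str] = []
    for old_url, old_status in sorted(before.items()):
        if old_status != 200:
            continue  # the page was already broken, so it is not a regression
        new_url = url_map.get(old_url)
        if new_url is None:
            regressions.append(f"{old_url}: no mapped URL on the new site")
        elif after.get(new_url) != 200:
            regressions.append(f"{old_url} -> {new_url}: status {after.get(new_url)}")
    return regressions
```

Without the `before` snapshot there is nothing to compare against, which is the whole argument for benchmarking ahead of launch.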

After you complete the migration, you can gather this data again to truly judge your results. Everyone will remember to check the new traffic statistics, and even the new rankings, but only an SEO will remember to check that those numbers make sense and you haven’t accidentally orphaned half of your product pages. SEOs will make sure users aren’t just on your site, but crawlers are too. With proper data at your disposal, you can set about making iterative improvements which will undoubtedly be necessary.

3. Migrating your tracking tools

All this talk about performance and results is for naught if you can’t actually track any of it. Much like Google’s search engine, Google tools aren’t so keen on supporting your site migration either. Therefore, you have to make sure you’re ready to start tracking the new site, ideally without losing your old data.

Because they deal with various tracking tools and codes all the time, an SEO has to be a Google Analytics expert too (it’s a common requirement for the job). So how do you avoid a scenario in which you either have no historical data and can’t measure success, or have two different accounts and have to calculate the performance comparisons yourself? By making plans to migrate your tracking tools.

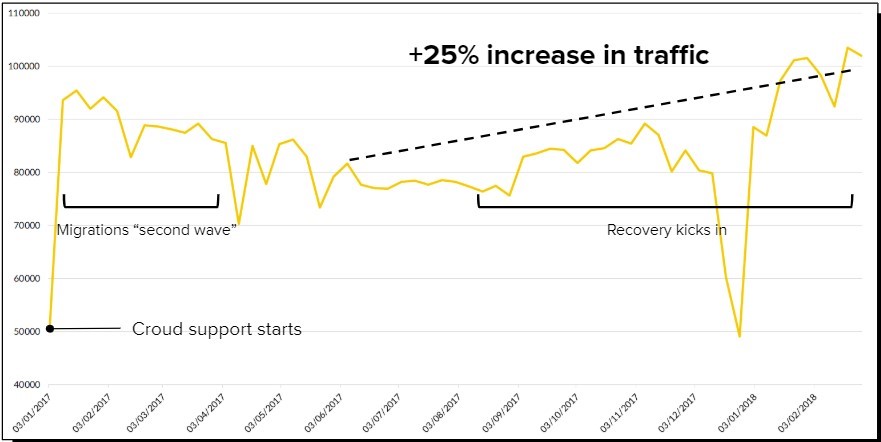

Ideally, you’ll use the same analytics tracking code on the migrated site, so that the old metrics can be directly compared to the new numbers once the migration takes place. Need some more persuasion?

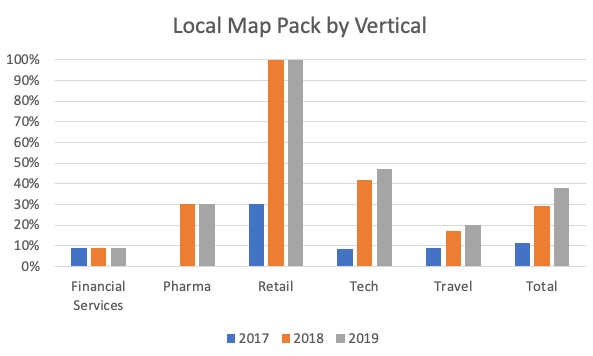

Take a look at this graph detailing a successful site migration.

Source: Croud

4. Testing and the importance of the human touch

So you’ve planned all your new pages, and your new site is built. What’s next? Hopefully, it’s built in a staging environment and not actually live. If it is already live, you run the risk of causing all sorts of issues with duplicate content and ranking cannibalization.

However, your SEO can easily take charge of this with a robots.txt directive (which will haunt them until the site is live and they can change it). Despite its purpose, a staging environment doesn’t always reflect the search engine’s behavior since it lives in isolation. There’s no way to track backlinks or see exactly what it will look like in a SERP at this time.

Often, Googlebot doesn’t even fully crawl staging environments, because doing so is seen as a waste of time. Therefore, your SEO’s brain is your very best test.
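The robots.txt directive mentioned above is easy to forget at launch, so it can be worth a quick automated check on both environments. This is a sketch using Python’s standard `urllib.robotparser`; the robots.txt contents and the example.com URLs are assumed for illustration, and the `is_crawlable` helper is a hypothetical name.

```python
from urllib.robotparser import RobotFileParser

# Assumed staging robots.txt that blocks every crawler from every path.
STAGING_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# On staging, the answer should be False. On the live site after launch it should be True.
print(is_crawlable(STAGING_ROBOTS_TXT, "https://staging.example.com/products/"))
```

Running the same function against the live robots.txt after launch is a cheap way to confirm that the staging block did not ship with the site.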

Everyone will check that the pages are set up as planned, but your SEO will be the one who can thoroughly re-test each individual redirect at 2 am. This will likely be the last chance to catch any mistakes before launch, so it’s critical to make sure that every redirect behaves as expected and returns a 301 status code.

Lastly, you’ll need to make sure that a single XML sitemap stays live on the legacy site, containing all the legacy URLs. This will be used to push Googlebot through the old URLs and onto the new site, expediting the discovery of your meticulously mapped redirects.
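If the legacy URL list already exists from the mapping stage, producing that XML file takes only a few lines. The sketch below assumes the file follows the standard sitemap format and uses Python’s built-in `xml.etree.ElementTree`; `build_legacy_sitemap` and the old.example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_legacy_sitemap(urls: Iterable[str]) -> str:
    """Return sitemap XML listing every legacy URL in a <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as & when serializing.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical legacy URLs used only for illustration.
legacy_urls = [
    "https://old.example.com/",
    "https://old.example.com/products/widget?colour=red&size=m",
]
print(build_legacy_sitemap(legacy_urls))
```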

5. Launching and mitigating loss

Finally, you’re ready to flip the switch and the champagne bottles are out. So you turn on the new site, and congratulations – you’ve just lost 20% of your traffic.

No, really, congratulations. In case you forgot the daunting chart we shared earlier in this post, website migrations can cause damaging losses, and sites that don’t prepare accordingly often never recover. However, if you’re smart and you hired an SEO expert to take charge of this project, they’ll have the task in hand.

Your traffic loss is a product of search engines and users not recognizing your new site – temporarily. Your SEO will have made sure everything is set up properly, so Googlebot is quickly figuring out that your new site contains all the same high-ranking, trustworthy content as your old site. It’s still a little miffed at you for changing on it, so you may only get back onto the second page of results.

You’ll still have some further optimizations to do, but it’s much easier to go from page two to page one than from page ten to page one.

Just remember, we’re guiding this migration from an SEO perspective. Googlebot is basically a person, so as long as it can read the site, we assume that users will enjoy their experience too.

Kailin Ambwani is a Digital Associate at global digital agency Croud, based in their New York office.
