gringom

Tips to lower brand CPC for greater profitability

In the realm of digital marketing, brand ownership means everything. It’s safe to say that nearly all search advertisers see the vast majority of their traffic and revenue come from their branded initiatives.

Put simply, branded SEM (search engine marketing) is something advertisers need to fully own and focus on optimizing. That said, one of the biggest challenges in the brand space is spending as efficiently and effectively as possible relative to CPC (cost per click) levels.

Knowing that branded campaigns are so important for paid search, many advertisers opt to max out their keyword CPC bids. This means that the CPC headroom, or monetary gap between your max CPC bid and your keyword’s average CPC, will be much larger than needed.

In their minds, this ensures that they are capturing the maximum amount of traffic without sacrificing brand real estate. Although the theory behind that approach is technically accurate, these advertisers are not being as efficient with client spend as they could be. They also give Google and Bing’s algorithms a greater opportunity to charge extra money.

Typically, SEM advertisers fall into this trap for a good reason, because they want to ensure that they are eliminating competition on their client’s branded space.

But, we believe that minimizing the headroom between the max CPC bid and the average CPC over time will allow these advertisers to ultimately cut the spend levels without the sacrifice of user volume or traffic.

What we did to prove this theory

We ran a couple of tests to see how traffic was affected. Initially, we cut our bids in half, but we immediately saw traffic drop off as a result. Then we took a different approach and asked what would happen if we shaved our bids down incrementally on a daily basis. This would allow us to keep a close eye on traffic and impression share, and bid back up if we ever fell below a certain volume threshold. Ultimately, we found that if you play this long-term dance with the search engine, the theory holds true. You can reduce your average CPC, maintain consistent traffic levels, and ultimately lower your keyword bids.

Implementation

Before launching a bid walk down test, it’s important to audit your account for potential risks and to estimate the expected impact. Here are several preliminary checks that we recommend:

- Competitive landscape: Check the auction insights on your core keyword over the past year and note how many competitors are bidding in the auction as well as their average impression share. The higher the competition, the higher the risk, since advertisers will be able to overtake your position without bidding as aggressively. The higher competition also limits the opportunity to drive lower cost, since auction activity is a key component of Google’s quality score algorithm.

- The headroom calculation: Another important check is looking at the past average CPC versus your current bid. The difference between your bid and the average CPC equals the headroom. Larger headroom means more opportunity for incremental efficiency. Because every advertiser lives in a unique digital environment, we don’t have a concrete headroom threshold that indicates an opportunity for cost savings. In general, we have run successful tests with advertisers who have a headroom ratio above eight. As an example, an advertiser with a two-dollar bid and a twenty cent average CPC is a qualified candidate. Our most successful case studies included advertisers with lower competition and a greater headroom spread.
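The headroom check can be sketched in a few lines of Python. This is only an illustration: the `KeywordStats` class and the function names are hypothetical, and the "headroom ratio" is assumed here to mean the max CPC bid divided by the average CPC, which is one reading consistent with the two-dollar bid and twenty cent average CPC example.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    """Hypothetical container for the two numbers the check needs."""
    max_cpc_bid: float  # current max CPC bid, in dollars
    avg_cpc: float      # historical average CPC, in dollars


def headroom(stats: KeywordStats) -> float:
    """Monetary gap between the max CPC bid and the average CPC."""
    return stats.max_cpc_bid - stats.avg_cpc


def headroom_ratio(stats: KeywordStats) -> float:
    """Assumed definition: max CPC bid divided by average CPC."""
    if stats.avg_cpc <= 0:
        raise ValueError("average CPC must be positive")
    return stats.max_cpc_bid / stats.avg_cpc


def is_candidate(stats: KeywordStats, min_ratio: float = 8.0) -> bool:
    """True when the ratio clears the rule-of-thumb threshold of eight."""
    return headroom_ratio(stats) > min_ratio


# The example from the text: a $2.00 bid against a $0.20 average CPC.
example = KeywordStats(max_cpc_bid=2.00, avg_cpc=0.20)
print(headroom(example), headroom_ratio(example), is_candidate(example))
```

The threshold of eight is a rule of thumb rather than a hard cutoff, so it is left as a parameter.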

Now for the exciting part. Let’s begin decreasing bids to reduce the average CPC. Keep in mind that this process may take several months, but the long-term benefits can help advertisers gain up to 40% cost savings, which can significantly improve profitability and free up budget for acquiring new customers. Here are the steps:

1. Benchmark position & share metrics

Looking at the past 30 days, determine your average impression share and absolute top impression share (the newer metric that replaces average position). For most advertisers, these metrics hover between 90% and 99%.

2. Launch engine bidding rules

Now that we have our benchmark share of voice metrics, we can launch two engine rules to help automate the bid walk down process. Google/Bing react poorly to major shifts, so we are going to set rules that only change our keyword bid incrementally up or down per day.

- The bid down rule – Create filters to isolate down to your core keyword and set a daily rule to decrease the max CPC if – average impression share > X, and absolute top impression share > X using yesterday’s data.

- The bid up rule – Stay at the core keyword level, but now set a rule to increase the max CPC if average impression share < X and absolute top impression share < X using yesterday’s data.

It is imperative to set both rules to run each morning. So long as your core term is maintaining adequate search impression volume, the bid down rule will make incremental adjustments daily. Once your impression share falls below your set threshold, the bid up rule will increase your bid every day until you have regained share. We set the impression share rules plus or minus a small percentage from their actuals so that the rules have more room to make changes. For a more conservative approach, set these rule thresholds to an exact percentage over the past 30 days.
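The logic of the two rules can be sketched as a single function that runs once per morning on yesterday's data. This is a minimal sketch, not an engine API: the `DailyShare` class, the `next_bid` function, the 2% daily step and the one-cent floor are all assumptions chosen for illustration. In practice the rules would be configured in the ad platform's automated rules interface.

```python
from dataclasses import dataclass


@dataclass
class DailyShare:
    """Yesterday's share metrics for the core keyword, as fractions (0-1)."""
    impression_share: float
    abs_top_impression_share: float


def next_bid(current_bid: float,
             yesterday: DailyShare,
             is_threshold: float,
             abs_top_threshold: float,
             step: float = 0.02,     # assumed 2% daily adjustment
             floor: float = 0.01) -> float:
    """Return today's max CPC bid under the bid down / bid up rules."""
    above = (yesterday.impression_share > is_threshold
             and yesterday.abs_top_impression_share > abs_top_threshold)
    below = (yesterday.impression_share < is_threshold
             and yesterday.abs_top_impression_share < abs_top_threshold)
    if above:
        # Bid down rule: both share metrics are above their thresholds.
        return max(floor, round(current_bid * (1 - step), 2))
    if below:
        # Bid up rule: both share metrics are below their thresholds.
        return round(current_bid * (1 + step), 2)
    # Mixed signals: leave the bid unchanged for today.
    return current_bid


# Healthy share yesterday, so the bid is walked down by one step.
print(next_bid(2.00, DailyShare(0.97, 0.95), is_threshold=0.95, abs_top_threshold=0.90))
```

Keeping the step small reflects the point above that the engines react poorly to major shifts. A single pair of thresholds is used for both rules here for brevity, whereas the text suggests offsetting them slightly from the 30-day actuals.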

Over time, you should expect to see your max CPC get closer to your average CPC, which will reduce overall cost without losing any volume. Advertisers with high auction pressure should check their core keyword daily (auction insights, impression share metrics, and live search results) to ensure that competitors are not outbidding your brand.

To conclude, as an advertiser, it is imperative to recognize the volatility of the search engine landscape. There are a lot of moving parts with branded real estate, some easy to control, and some not. This process will help you capitalize on greater opportunities without leaving anything up to chance.

Lowering brand CPCs isn’t an overnight process by any means. But if you are willing to take a gradual approach towards efficiency, you will save your client significant money without sacrificing impression share on your keywords.

Steven Oleksak is Senior SEM Manager and Nicolas Ross is an SEM Coordinator at PMG Advertising Agency.

Related reading

A step by step guide (with lots of pictures) for getting started with Facebook dynamic ads. How to set them up, and tips for using them successfully.

The internet has 4.1 billion users and you’re missing out if you’re not retargeting. Learn the art of customer retention with Google Dynamic Remarketing.

About 60 percent of B2B companies acquired customers through LinkedIn paid ads. Ace B2B paid advertising with these strategic tips on execution.

AI delivers the ultimate luxury

Artificial intelligence and machine learning are increasingly important aids in marketing and retailing decision-making. Image credit: Pattern89

By R. J. Talyor

When you think of the word “luxury,” what immediately comes to mind? Fine jewelry? Upscale hotel rooms? Haute couture?

Up until now, luxury has been defined by a “more is more” mantra. But what exactly we want more of has changed. Now, the ultimate luxury is time.

Busy-ness has defined the 21st century and luxury brands are certainly facing this challenge head-on.

Fast fashion, counterfeits and mass luxury draw dollars away from luxury, while endless meetings, longer to-do lists and mobile devices dull our attention.

Meanwhile, well-funded competitors emerge daily to compete for legacy luxury customers. With more competition and less time, how can luxury brands learn to differentiate? That is where artificial intelligence (AI) can help.

According to Adobe, 47 percent of digitally mature brands have an AI strategy. But what about the other 53 percent? How are luxury brands using AI to build the next chapter of their legacy?

AI is a transformative tool in a luxury brand’s toolbox for three key reasons:

AI automates time-consuming repetitive tasks

The best way to save time with AI is to look for the places where teams are spending hours on manual calculation.

Whether it is in pivot tables, crunching numbers in Facebook Ads Manager or combining multiple spreadsheets into a single source of truth, AI can help. By finding the biggest time suck first, the impact can be felt faster.

Using AI lets humans stick to what they are good at

While AI tries to be creative, only humans can create the next Colin Kaepernick Nike campaign or fashion week sensation.

By delegating things such as number crunching, ad budget optimization or even email subject line testing, humans can stay busy doing what they do best: creating the next big idea.

Sometimes AI really does know best

There are some things robots are just better at doing. A really big one is looking at data and making cold, unemotional decisions.

Robots can find ad sets that underperform, help you optimize your ad spend and tell you which headline will perform best out of the 10 options you came up with. But AI will not do the best job of coming up with the headline in the first place.

Notice a theme emerging here? AI has some serious strengths. Strengths that make life better for humans. So why are so many luxury brands hesitant to adopt AI?

The answer is short, but not simple: fear.

Many luxury brands are afraid of a brand defined by machines. Others are unwilling to let go of busy-ness.

These fears keep luxury brands locked into doing the same thing they were doing last week or last month because it is safe and predictable.

But, in the world of luxury retail, when was the last time you said “that safe, predictable brand is killing it?”

ARE YOU going to be the luxury marketer who masters artificial intelligence to reclaim the only true luxury: time?

R.J. Talyor is founder/CEO of Pattern89, Indianapolis, IN. Reach him at rj@pattern89.com.

Facebook Messenger to get new lead gen templates, appointment booking

Messenger’s new lead gen template.

Messenger is rolling out two new features for businesses: lead generation templates and an appointment booking interface that will integrate with calendar platforms. The new tools were launched during Facebook’s F8 Developers Conference, which kicked off on Tuesday.

The lead gen templates are housed directly in Facebook’s Ads Manager platform. Businesses will be able to create an automated question and answer flow that will be delivered via Messenger when someone clicks on a News Feed ad offering a chat option. The booking feature involves an interface within the Messenger Platform API and can be integrated with calendar systems so that customers can see a business’s availability and book their appointment based on open time slots.

Why we should care

Both new features offer brands the opportunity to close the communication gap with potential customers, giving them a personalized experience via Messenger. Facebook reports General Motors was able to generate 3,000 leads in eight weeks via the lead gen template it created with its development partner Smarters.

Sephora had early access to the appointment booking feature in Messenger and worked with Assist to build its booking experience. Using the new Messenger feature, the beauty company saw a 50 percent increase in in-store bookings compared to other channels.

More on the news

- Both General Motors and Sephora teamed up with development partners to create their automated Messenger experiences, highlighting the need for brands to work with companies that understand the technology and nuance of creating automated experiences.

- Facebook said both features are still in beta, but will launch later this year.

- Facebook reports users and businesses are currently exchanging over 20 billion messages over Messenger each month, according to their internal data from April.

How to check for duplicate content to improve your site’s SEO

Publishing original content to your website is, of course, critical for building your audience and boosting your SEO.

The benefits of unique and original content are twofold:

- Original content delivers a superior user experience.

- Original content helps ensure that search engines aren’t forced to choose between multiple pages of yours that have the same content.

However, when content is duplicated either accidentally or on purpose, search engines will not be duped and may penalize a site with lower search rankings accordingly. Unfortunately, many businesses often publish repeated content without being aware that they’re doing so. This is why auditing your site with a duplicate content checker is so valuable in helping sites to recognize and replace such content as necessary.

This article will help you better understand what is considered duplicate content, and steps you can take to make sure it doesn’t hamper your SEO efforts.

How does Google define “duplicate content”?

Duplicate content is described by Google as content “within or across domains that either completely match other content or are appreciably similar”. Content fitting this description can be repeated either on more than one page within your site, or across different websites. Common places where this duplicate content might be hiding include duplicated copy across landing pages or blog posts, or harder-to-detect areas such as meta descriptions that are repeated in a webpage’s code. Duplicate content can be produced erroneously in a number of ways, from simply reposting existing content by mistake to allowing the same page content to be accessible via multiple URLs.

When visitors come to your page and begin reading what seems to be newly posted content only to realize they’ve read it before, that experience can reduce their trust in your site and the likelihood that they’ll seek out your content in the future. Search engines have an equally confusing experience when faced with multiple pages with similar or identical content and often respond to the challenge by assigning lower search rankings across the board.

At the same time, there are sites that intentionally duplicate content for malicious purposes, scraping content from other sites that don’t belong to them or duplicating content known to deliver successful SEO in an attempt to game search engine algorithms. However, most commonly, duplicated content is simply published by mistake. There are also scenarios where republishing existing content is acceptable, such as guest blogs, syndicated content, intentional variations on the copy, and more. These techniques should only be used in tandem with best practices that help search engines understand that this content is being republished on purpose (described below).

Source: Alexa.com SEO Audit

An automated duplicate content checker tool can quickly and easily help you determine where such content exists on your site, even if hidden in the site code. Such tools should display each URL and meta description containing duplicate content so that you can methodically perform the work of addressing these issues. While the most obvious practice is to either remove repeated content or add original copy as a replacement, there are several other approaches you might find valuable.
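To make the idea of a duplicate content checker concrete, here is a minimal sketch in Python. It is not how any particular tool works: the `normalize` and `find_duplicates` names are hypothetical, and the input is assumed to be a mapping from URL to already-extracted text (page copy or meta description). It only catches text that is identical after whitespace and case normalization, not content that is merely "appreciably similar".

```python
import hashlib
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivial differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text is identical.

    `pages` maps each URL to the text being compared.
    Returns only the groups that contain more than one URL.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "/landing-a": "Save money on  brand campaigns.",
    "/landing-b": "save money on brand campaigns. ",
    "/blog/post": "A completely different article.",
}
print(find_duplicates(pages))
```

Each group in the result is a set of URLs that would need one of the fixes discussed in the next section.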

How to check for duplicate content

1. Using the rel=canonical <link> tag

These tags can tell search engines which specific URL should be viewed as the master copy of a page, thus solving any duplicate content confusion from the search engines’ standpoint.

2. Using 301 redirects

These offer a simple and search engine-friendly method of sending visitors to the correct URL when a duplicate page needs to be removed.

3. Using the “noindex” meta tags

These will simply tell search engines not to index pages, which can be advantageous in certain circumstances.

4. Using Google’s URL Parameters tool

This tool helps you tell Google not to crawl pages with specific parameters. This might be a good solution if your site uses parameters as a way to deliver content to the visitor that is mostly the same content with minor changes (e.g., headline changes, color changes, etc.). This tool makes it simple to let Google know that your duplicated content is intentional and should not be considered for SEO purposes.
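For the first and third options above, the markup itself is short. The sketch below uses two hypothetical Python helpers that build the tags as strings. The output is the standard canonical link element and robots meta tag, both of which belong in the page's `<head>`. The example URL is a placeholder.

```python
from html import escape


def canonical_link_tag(preferred_url: str) -> str:
    """Build the rel=canonical link element pointing at the master copy."""
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'


def noindex_meta_tag() -> str:
    """Build the robots meta tag that asks search engines not to index a page."""
    return '<meta name="robots" content="noindex">'


# Placeholder URL; the query string shows that "&" is escaped for HTML.
print(canonical_link_tag("https://example.com/page?a=1&b=2"))
print(noindex_meta_tag())
```

Generating the tags from one helper keeps the preferred URL consistent across every duplicate variant of a page.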

Source: Alexa.com SEO Audit

By actively checking your site for duplicated content and addressing any issues satisfactorily, you can improve not only the search rankings of your site’s pages but also make sure that your site visitors are directed to fresh content that keeps them coming back for more.

Got any effective tips of how you deal with on-site content duplication? Share them in the comments.

Kim Kosaka is Director of Marketing at Alexa.com.

Related reading

How to create unique articles that encompass major on-page SEO elements, and touch the reader? A streamlined process, key benefits, and screenshots listed.

YouTube is not just a social media platform. It’s a powerful search engine for video content. Here’s how to make the most of its SEO potential.

In part three, we will learn how to automatically group pages using machine learning to recover SEO site traffic using Python.

Quora, Pinterest ads pixel integrations now available in Google Tag Manager

Quora’s Google Tag Manager integration.

Pinterest and Quora are now approved Google Tag Manager vendors, making it easy for marketers to manage their Pinterest and Quora Pixels via Google’s platform.

Why we should care

The native integrations for Quora and Pinterest make it much easier to set up those pixels in Google Tag Manager (GTM) to track ad campaign performance from those channels. No more having to create a custom HTML tag in GTM.

Within GTM, you can set up your pixels from channels to track user behaviors such as viewing a piece of content, or adding items to the cart, without having to alter the code base.

Currently, Pinterest and Quora’s Google Tag Manager integrations only support tracking from websites, not apps, according to Google’s supported tag manager list.

More on the news

- Both Pinterest and Quora shared quick steps for adding each platform’s tags into your Google Tag Manager account: Pinterest instructions here. Quora instructions here.

- Google Tag Manager currently supports more than 80 websites natively, including LinkedIn, Twitter, Adobe Analytics, Microsoft Ads and more.

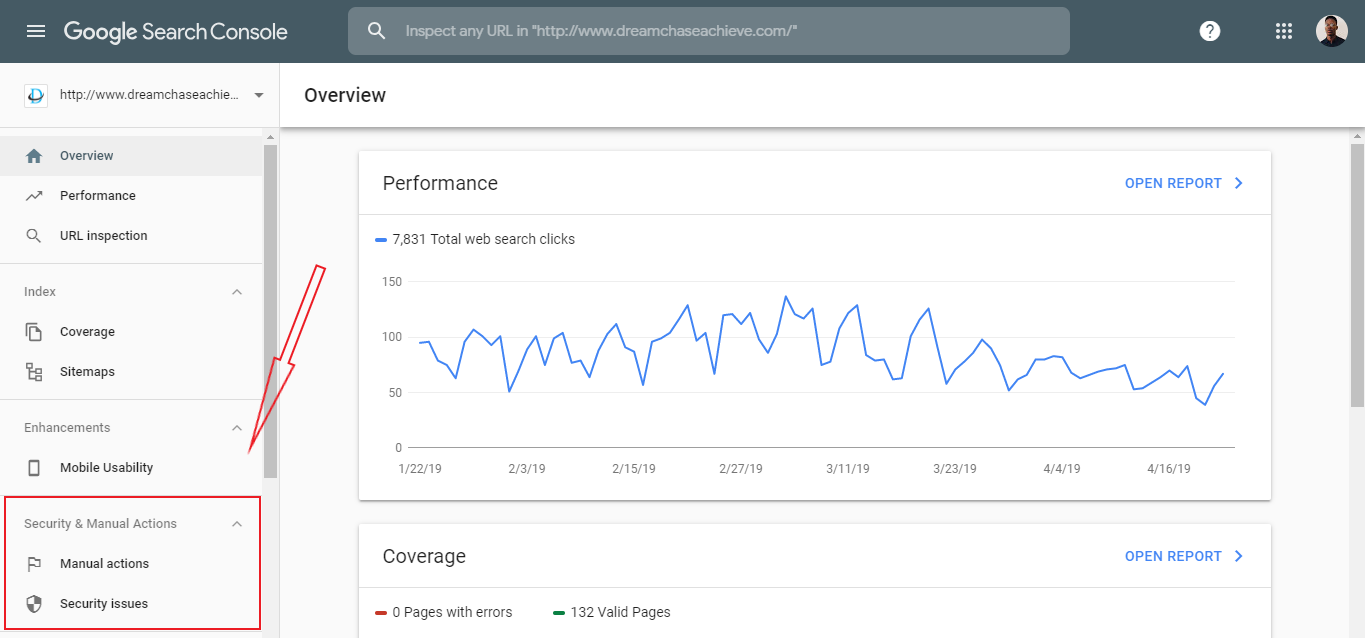

How to take advantage of the latest updates to Google Search Console

After testing the Search Console for more than a year, Google announced its release from beta last year.

Over the previous year or more, Google slowly rolled out the beta, eventually opening it to all Search Console users and migrating features from the old version to the new one. With its new UI and new features, the tool is currently performing at its best.

But it’s difficult to keep up with Google Search Console updates, let alone integrate them into your search marketing mix. However, because SEO is ever evolving, these updates always come at a good time. The following is a guide on the newest features you might not have heard of yet, and how to make use of them to improve your search marketing.

1. Improved UI

The main source of confusion surrounding the new version of the Search Console has been how Google is handling the transition. For starters, not all features have been moved directly into the new version. All features and the reports they provide are being evaluated so they can be modified and presented to handle the modern challenges facing the SEO manager. Google even published a guide to explain the differences between the two versions.

Overall, the tool has been redesigned to provide a premium-level UI. As a marketer, this benefits you in one major way: without the clutter, you’re able to remain more focused and organized.

You can look at reports that matter the most, and even those you don’t have to spend too much time on because they’ve been made briefer. Monitoring and navigation are also more time-efficient.

These may not seem like a direct boost to your SEO efforts, but with this improved UI, you can get more work done in less time. This freed up time can then be channeled to other search marketing strategies.

2. Test live for URL inspection

The URL Inspection tool got an important update that allows real-time testing of your URL. With the “Test Live” feature, Google allows you to run live tests against your URL and gives a report based on what it sees in real time, not just the last time that URL was indexed.

Google says this is useful “for debugging and fixing issues in a page or confirming whether a reported issue still exists in a page. If the issue is fixed on the live version of the page, you can ask Google to recrawl and index the page.”

The URL Inspection tool is fairly new. It’s a useful tool as it gives you a chance to fix issues on your page. So Google doesn’t just notice what’s wrong with your page — it also tells you, allows you to fix it, and reindexes the page.

URL Inspection has other features: Coverage and Mobile Usability.

i. Coverage – This has three sub-categories:

- Discovery shows the sitemap and referring page.

- Crawl shows the last time Google crawled the page and if the fetch was successful.

- Indexing shows if indexing is allowed.

ii. Mobile Usability: It shows if your page is mobile friendly or not. This helps you optimize your site for mobile.

Overall, URL Inspection is a handy feature for easily identifying issues with your site. Afterward, you can then send a report to Google to help in debugging and fixing the identified issues. The feature is also useful for checking performance and making sure your site is SEO-optimized and your pages are indexed.

3. Manual actions report

From the menu bar, you can see the “Manual actions” tab. This is where you find the new Manual Actions report that shows you the various issues found on your web page.

As you’d expect, the report is brief and only shows the most important information. It can even be viewed as part of the report summary on the Overview page. If any issues are found, you can fix them and request a review from Google. The major errors that can be found and fixed from here are mobile usability issues and crawl errors.

This feature helps you, as a search marketer, to minimize the amount of time you take to review your website performance. It’s one more step to improving your website speed and overall performance because issues are quickly detected and fixed. And of course, it’s no news that speed is one of the key attributes of an SEO-friendly website.

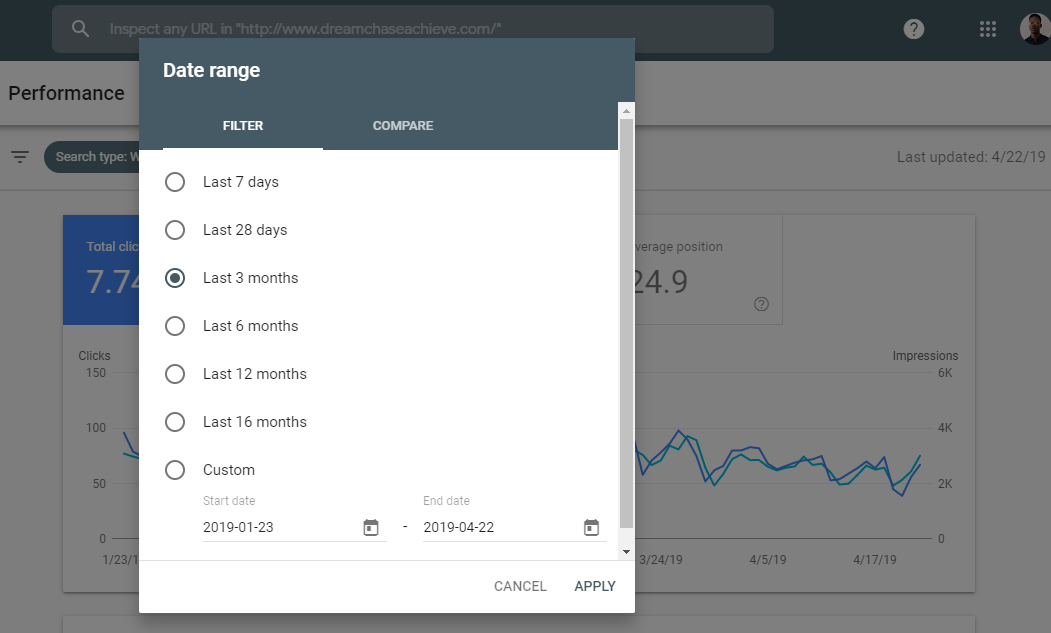

4. Performance report

The “Performance” report feature was the first to be launched in the beta version of the Search Console so it’s been around for more than a year. It replaces Search Analytics and comes in a new and improved UI.

Compared to Search Analytics, the main strength of the new report is in the amount of search traffic data. Instead of 3 months, the Performance report incorporates 16 months of data. This data includes click, CTR, impression, and average ranking metrics at all levels (device, page, query, and country).

You can use this data to optimize your website, improve mobile SEO, evaluate your keywords, check content performance and more. All these activities help improve your SEO.

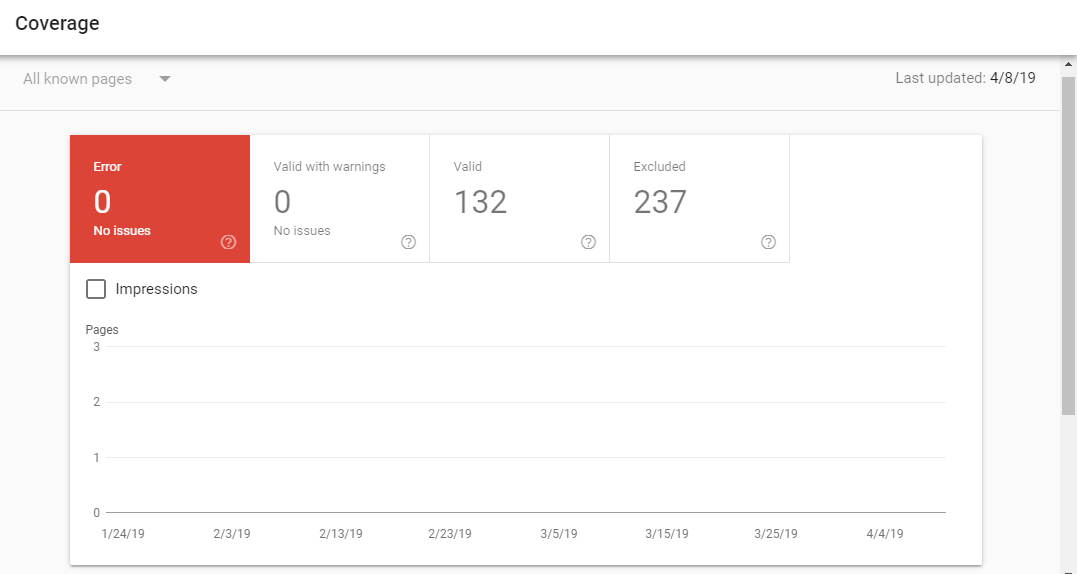

5. Index coverage report

Index Coverage was launched alongside the Performance report. It’s an evolution of the previous Index Status and Crawl Errors reports.

Providing site-level insights, the Index Coverage report flags problems with pages submitted in a sitemap and provides trends on indexed pages and on those that can be indexed.

By allowing you to see the indexing and crawling issues from Google’s perspective, the report pinpoints the problems limiting your ability to rank high on the SERP.

Conclusion

The Google Search Console will continue to be one of the best free SEO tools out there. Every new feature adds to it a new ability to help marketers better manage their SERP appearances. If you care about where and how you appear on search engines, these and any future updates, including how to use them, will be of much interest to you.

Joseph is the Founder and CEO of Digitage. He can be found on Twitter.

Related reading

YouTube is not just a social media platform. It’s a powerful search engine for video content. Here’s how to make the most of its SEO potential.

In part three, we will learn how to automatically group pages using machine learning to recover SEO site traffic using Python.

A new analysis of 73,000 business listings found that 96% of all business locations fail to list their business information correctly.

Bing Ads rebrands as Microsoft Advertising

First there was Microsoft adCenter. Then there was Bing Ads. Now there is Microsoft Advertising.

Why we should care. The rebrand emphasizes a focus on personalization and AI. “In the next year, we’re introducing more advertising products with built-in AI, more connected to your data and your business,” Rik van der Kooi, corporate VP for Microsoft Advertising, said in a blog post Monday.

It’s a bit of a back to the future move with a return to using the broader (and resurgent) Microsoft branding to signal offerings that extend beyond search inventory and search data.

“It’s a simple shift because our clients and partners already know us as Microsoft, and many are already tapping into our new advertising products that go above and beyond search, such as the Microsoft Audience Network.”

Microsoft Audience Network (MSAN) launched almost exactly a year ago. The AI backbone that powers Bing has given the company the “right to innovate,” David Pann, general manager of global search business at Microsoft, said during a keynote discussion at SMX East last year. He cited MSAN and LinkedIn integrations as one example.

Interestingly, LinkedIn was not named in Monday’s news. Microsoft started integrating the audience data graphs of LinkedIn and Microsoft in 2017 and made LinkedIn data available for targeting in Microsoft Audience Network and then search ads last year.

Reflects several changes. The last rebranding came out of an initiative to focus specifically on search advertising. The Bing Ads branding replaced adCenter in 2012 at the same time as Microsoft and Yahoo dubbed their search alliance the Yahoo Bing Network.

Where once Yahoo’s name preceded Bing’s in that search alliance, Yahoo, now under Verizon Media Group, ceded search ad delivery to Bing last year in a deal that made Bing Ads the exclusive search advertising platform for Verizon Media properties, including Yahoo and AOL. That deal also pushed Google out from serving any slices of that inventory.

As part of that deal, the Microsoft Audience Network (the early harbinger of this broader rebrand) also gained access to inventory on Verizon Media properties.

In similar fashion, Google’s brand change from AdWords to Google Ads last year reflected the platform’s evolution from keyword-based search ads into one that supports many different ad formats (text, shopping, display, video, app install) across Search, YouTube, Gmail, Maps and a network of partner sites and apps.

What else is new? The news comes as what had been dubbed the Bing Ads Partner Summit kicks off at Microsoft’s Redmond, WA headquarters this week. With this announcement, the Bing Ads Partner Program is now the Microsoft Advertising Partner Program.

The Bing brand is sticking around: “Bing remains the consumer search brand in our portfolio, and will only become more important as intent data drives more personalization and product innovation.”

Sponsored Products were also announced Monday. Sponsored Products allow manufacturers to promote their products in shopping campaigns with their retailer partners. “Manufacturers gain access to new reporting and optimization capabilities, and retailers get additional product marketing support with a fair cost split.” Sponsored Products is in beta in the U.S. only at this time.

Market share stats. Microsoft Advertising said it has 500,000 advertisers. For a bit of reference, Google passed the million advertiser mark in 2009. Facebook said last week that 3 million advertisers are using Stories Ads alone.

It also said it reaches more than 500 million users and that Bing’s search share has grown for 100 consecutive quarters, according to comScore custom data.

This article originally appeared on Search Engine Land. For more on search marketing, click here.

Privacy could be hurting Facebook Portal sales

When Facebook’s smart display was released last year, it received mixed-to-positive reviews. However, a substantial number of them also digressed into the privacy and personal data controversies that have recently surrounded Facebook. A review from The Verge offers a representative example:

It’s not often that a new gadget gets announced and I don’t immediately want to get my hands on it. I am an extreme early adopter, both by profession and by inclination. But when Facebook’s new Portal and Portal Plus were announced a month ago, my response was a firm “no thanks.” And I’m not alone: after a year of data privacy scandals, many people’s first reaction to the Portal, a smart display device that has an always-listening microphone and always-watching camera, landed somewhere between hesitation and revulsion.

Portal currently comes in two smart display versions

Late to the market. Facebook was late to the market with the smart display device (powered by Alexa) and that fact undoubtedly hurt its position. But there is now data suggesting people may be staying away because of privacy concerns.

Facebook’s Q1 2019 earnings this week beat Wall Street expectations. The company reported nearly $15 billion in ad revenue, up 26 percent year over year. Advertiser attitudes and Facebook revenue have not been negatively impacted by the various and widely reported privacy scandals – to the surprise of some. But Portal may be a different story, and there’s evidence that these privacy controversies are taking some toll on Facebook’s relationship with consumers.

Hardware revenue down YoY. Facebook doesn’t break out hardware revenue; it resides within Payments & Other Fees. Those revenues in Q1 were $165 million, which was down 4 percent from last year (VR hardware is part of that revenue as well). And while we have no way of knowing specifically about Portal sales, the evidence suggests they’re not moving very well.

Beyond the Q1 category revenue decline, the company recently dropped the price of both Portal models by $100. And in multiple surveys, consumers have indicated that privacy is a growing concern when it comes to smart speakers and smart displays.

A 2019 PC Magazine survey of 2,000 U.S. adults found that privacy topped the list of concerns about smart home products. Privacy was also at the center of a Common Sense-Survey Monkey poll about parents’ concerns about their children’s use of virtual assistants and smart speakers. A third survey from IBD/TIPP found that more than 70 percent of respondents were worried about smart speaker privacy. And there are more such surveys.

Why we should care. The smart speaker and display market right now looks a lot like the smartphone market – with two dominant companies in control: Amazon and Google. Apple is also in the running with its HomePod; and, as of this moment, so is Facebook.

If the social media company wants to compete in the smart home/smart speaker market, it will need to do a number of things: create a truly great device, price it aggressively and go above and beyond to offer privacy protection. Simple assurances are not enough.

Amazon advertising growth slowed again in Q1: Does it matter?

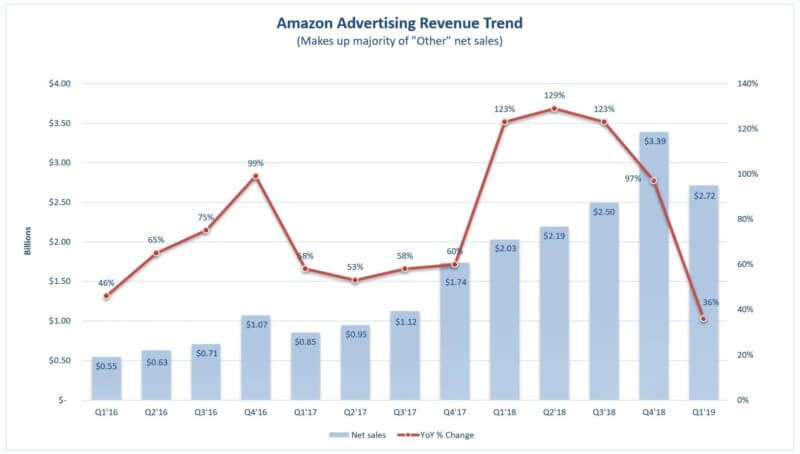

Data source: Amazon

For a few quarters there, Amazon was posting triple-digit growth rates. Then growth slowed to 97% in the fourth quarter of 2018, and on Thursday the company reported just 36% growth in its advertising business line for the first quarter of 2019.

Amazon reports its advertising business under an “Other” category. And while advertising accounts for the majority of that category, Amazon said that the advertising business actually grew “a bit more” than 36% in the first quarter. Another thing to note is that an accounting change puts previous quarters in a more favorable light, so the actual separation isn’t as big as it looks. All that said, Amazon is clearly invested in growing its advertising, and it is still very early days for that piece of the business. Here is what Amazon had to say about it on Thursday’s earnings call.

Focus on ad relevancy. “I would say really what we’re focused on right now is driving relevancy, ensuring that we service the most useful ad as possible. I think that’s going to be the best experience for customers and also for advertisers,” Brian Olsavsky, Amazon’s CFO, said on the earnings call when asked about the deceleration over the past two quarters.

Building tools to make buying easier. “So most of our focus has been on again adding more functionality, adding more products and adding — reporting for businesses and advertisers — so they can understand the incremental customers they’re seeing on Amazon through advertising with Amazon,” said Olsavsky. “So it’s more right now about tools and making better recommendations, making it easier to use our Amazon demand-side platform, things like that, operational improvements.”

Support for brands. “And then, I guess, we’re very focused on serving brands as well. That’s another theme that we have,” Olsavsky said. “These brand stores that we have are easy to create, customize, and we’ve had great pickup on that from brands, but they can show shoppers who they are and tell their story. So it builds a better engagement for the brand and the customer. It builds better customer loyalty both to that brand and also to Amazon.”

Still a nascent business. “I would just say, we’re early on in this venture. There’s a lot of — it’s having a lot of pickup by both vendors, sellers and also authors. So again, we feel like if we work on the inputs on this business and continue to grow traffic to the site, we will have a good outcome in the advertising space.”

Why we should care. So is this deceleration just a reflection of growing pains? It’s likely we’ll continue to see growth ebb and flow as Amazon continues to invest in the platform and products. Amazon has made several changes that reflect the goal of improving “efficiency and also performance of the advertisement themselves” that Olsavsky noted. In September, it streamlined its ad products in an effort to simplify ad buying in the vein of Google and Facebook’s ad platforms. It has also added new display and video formats and more inventory for Sponsored Products and Sponsored Brands ads.

Among its clients, Merkle reported that Amazon advertisers saw sales attributed to both Sponsored Products and Sponsored Brands more than double year over year, as spend grew 19% and 77% for those formats respectively. More than half of spend on Sponsored Products came from placements other than the top-of-search results, said Merkle.

Speaking of investments, Amazon also dropped the news that it is investing $800 million to default to one-day shipping for Prime members, instead of the current two-day shipping offer.